How to track the rank of keywords on Google using Python

Are you into marketing? In this tutorial, I will show you how to track the rank 🎯 of keywords in Google using Python 🐍. By the end of this tutorial, you will be able to track any keyword you like on Google and save hundreds of dollars 💸 every year.

Keyword tracking is a routine task in the marketing department of almost every company, and many marketing teams pay a lot of money to keep track of how their keywords rank in search engines.

If you are a new business, this can be a significant expense, and it is often cut from the budget to make room for other things. To avoid that, I have built a small Python tool that crawls Google and keeps you updated on the latest rank of your keywords.

What are we going to make?

In this Python tutorial, I will show you how to create a web scraper for Google search results. To do this, we will use Requests and Beautiful Soup.

Prerequisites

To follow along in this tutorial, you need the following tools:

- Requests, installed from PyPI. Requests is a simple, yet elegant, HTTP library for Python.

- Beautiful Soup, installed from PyPI. Beautiful Soup is a library that makes it easy to scrape information from web pages.

- Visual Studio Code.

- Python installed with pip.

- A basic understanding of Python. If you need a reference, see my Python cheatsheet.

This article was made in collaboration with Manthan Koolwal from ScrapingDog.

Let’s code

First, we need to install all the necessary libraries.

- Requests.

- Beautiful Soup. I will refer to this library as BS4 throughout this tutorial.

Open your terminal (Linux / macOS) or Command Prompt (Windows), create a folder for the project, and install the libraries. pip installs the libraries into your Python environment rather than into the folder; the folder is only there to hold our project files.

```shell
mkdir google_rank_tracker
cd google_rank_tracker
pip install requests
pip install beautifulsoup4
```

Okay, now that we have the libraries installed, let's move on and write some actual Python code.

Start by creating a new file named google-rank-tracker.py in the folder. Open it in Visual Studio Code and import the newly installed libraries at the very top of the file, as shown below.

```python
import requests
from bs4 import BeautifulSoup
```

Structure of the URL for a Google Search

To help you fully understand how the code works, I would like to explain a little theory first, so you know what is going on and why we write the code the way we do.

The target URL we scrape depends on the keyword, so it changes from keyword to keyword. However, the base URL for Google stays the same for every scrape.

The default structure of a Google search URL is: https://www.google.com/search?q={your_keyword_here}. In this tutorial, I will target the keyword fluent validation .net6 for the domain of this blog: https://blog.christian-schou/.

The target URL we are looking to scrape will turn into: https://www.google.com/search?q=fluent+validation+.net6.
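Rather than encoding the keyword by hand, you can let Python's standard library build the URL from the plain keyword. The sketch below assumes a hypothetical helper named `build_search_url()`: `urlencode()` replaces spaces with `+` and percent-encodes characters such as `#`, and the optional `num` argument adds the number-of-results parameter.

```python
from urllib.parse import urlencode

# Base URL for a Google search; only the query string changes per keyword
GOOGLE_SEARCH_URL = "https://www.google.com/search"


def build_search_url(keyword, num=None):
    """Build a Google search URL for a plain-text keyword (hypothetical helper)."""
    params = {"q": keyword}
    if num is not None:
        # Optional number of results to request, e.g. 100
        params["num"] = num
    # urlencode() turns spaces into '+' and percent-encodes unsafe characters
    return f"{GOOGLE_SEARCH_URL}?{urlencode(params)}"


print(build_search_url("fluent validation .net6"))
# https://www.google.com/search?q=fluent+validation+.net6
print(build_search_url("scrape prices", num=100))
# https://www.google.com/search?q=scrape+prices&num=100
```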

Check if the domain is in the top 10 of search results using the keyword

The first task is to implement logic that checks whether the domain appears in the top 10 Google search results for the keyword.

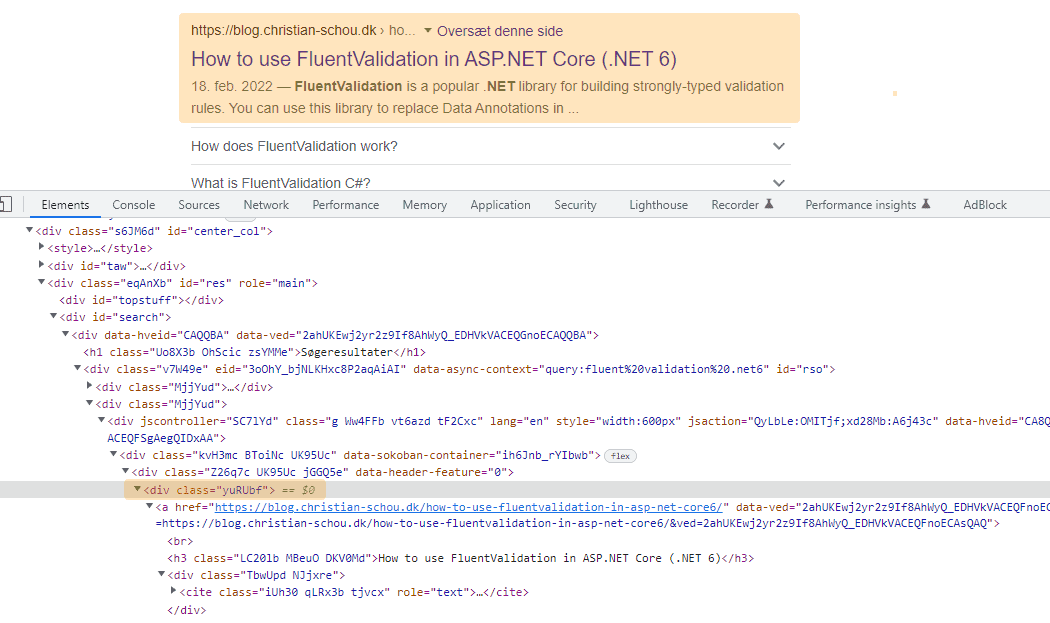

To read the URLs in the search results, we first have to figure out which HTML classes they are located inside. Right-click one of the results in your browser and select Inspect.

If you look at the elements that make up the page, you can see that each search result is located inside an element with the class MjjYud, and the link sits inside an element with the class yuRUbf, from which we can extract the value of the href attribute.
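To see how those two classes fit together without sending a request to Google, you can run BS4 against a small hand-written HTML snippet. This is a sketch: the markup is a simplified, hypothetical stand-in for a results page, and because class names like MjjYud and yuRUbf are generated by Google, they may change over time and need to be re-inspected.

```python
from bs4 import BeautifulSoup

# Simplified, hypothetical stand-in for a Google results page:
# each result block uses the class MjjYud, and organic links sit in yuRUbf
SAMPLE_HTML = """
<div class="MjjYud">
  <div class="yuRUbf"><a href="https://example.com/first-post">First result</a></div>
</div>
<div class="MjjYud">
  <div class="yuRUbf"><a href="https://blog.example.org/second-post">Second result</a></div>
</div>
<div class="MjjYud">
  <div>A result block without an organic link</div>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
blocks = soup.find_all("div", class_="MjjYud")

hrefs = []
for block in blocks:
    # Find the link container inside the result block, if there is one
    link_div = block.find("div", class_="yuRUbf")
    anchor = link_div.find("a") if link_div is not None else None
    if anchor is not None:
        # Read the value of the href attribute
        hrefs.append(anchor.get("href"))

print(len(blocks))  # 3
print(hrefs)
# ['https://example.com/first-post', 'https://blog.example.org/second-post']
```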

To do this in Python, we first create headers for our request that look like the ones a normal browser sends when a person visits a page. We declare a User-Agent that identifies the request as coming from a regular browser rather than a crawler.

```python
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36',
    'referer': 'https://www.google.com'
}
```

With the headers in place, we define the domain we are looking for and the target URL for our search. We then make a GET request to Google with the target URL and the headers we created, and store the result in a new variable named response.

search_for_domain = "blog.christian-schou.dk"

target_url='https://www.google.com/search?q=fluent+validation+.net6'

response = requests.get(target_url, headers=headers)

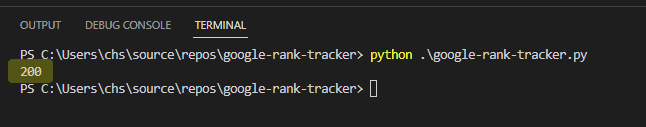

print(response.status_code)The response should result in a status 200, let's verify that by running our script.

Awesome! Google returned status code 200. This means the request succeeded and we have the HTML page we need to build the rest of our keyword rank tracker.

Now, the next milestone for this project is to find our domain. Let’s find it using BS4.

```python
# Parse the response into Beautiful Soup
soup = BeautifulSoup(response.text, 'html.parser')

# Extract all search results by looking up the class of the result container
results = soup.find_all("div", class_="MjjYud")
```

What is going on here?

- We parse the `text` of our `response` from Google with the `html.parser` parser in BS4, which builds a tree of the HTML that Google returned for our request.
- Finally, we create a new list (a BS4 `ResultSet`) named `results` that holds every search result block found on the first page, which is typically the top 10 results.

Now that we have a list of the search results on page one of Google, let's iterate over them one by one to get the link for each result. To do this, we need a for loop.

First, go back to the top of the file and import the `urlparse` function from Python's built-in `urllib.parse` module.

```python
import requests
from bs4 import BeautifulSoup
from urllib.parse import urlparse
```

The for loop we will implement looks like this; I explain each step in the comments:

```python
# Assume the domain was not found until we see it in the results
found = False
position = None

# Iterate over each result
for index, result in enumerate(results):
    # Look for the class yuRUbf to get the link of the result
    link_div = result.find("div", class_="yuRUbf")
    anchor = link_div.find("a") if link_div is not None else None
    # Skip blocks that do not contain an organic link
    if anchor is None:
        continue
    domain = urlparse(anchor.get("href", "")).netloc
    # print(domain)

    # If the domain we get from the URL parser matches the one we are looking for,
    # set found to True, add 1 to the position as the index starts at 0, and break out
    if domain == search_for_domain:
        found = True
        position = index + 1
        break
```

What happens in the code above?

- We use the `urlparse` function to extract the domain from each link in Google's list of search results.
- We set the variable `found` to `True` when the domain matches `search_for_domain`. On a match, we also set `position` to the index plus 1, because the index starts at 0, and then break out of the loop, since we have found what we were looking for.
- If the domain wasn't found in the results, we show a message saying it was not found in the top X results.
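The matching logic itself does not depend on Google, so you can try it on a plain list of URLs. The sketch below uses a hypothetical helper, `find_position()`, that mirrors the loop above: it compares the `netloc` of each URL with the target domain and returns a 1-based position, or `None` when there is no match. The sample URLs are made up.

```python
from urllib.parse import urlparse


def find_position(hrefs, target_domain):
    """Return the 1-based position of the first URL on target_domain, or None (hypothetical helper)."""
    for index, href in enumerate(hrefs):
        # netloc is the host part of the URL, e.g. "blog.example.org"
        if urlparse(href).netloc == target_domain:
            return index + 1  # enumerate starts at 0, ranks start at 1
    return None


sample_urls = [
    "https://example.com/a",
    "https://blog.example.org/post",
    "https://www.blog.example.org/other",
]
print(find_position(sample_urls, "blog.example.org"))  # 2
print(find_position(sample_urls, "missing.example"))   # None
```

Because the comparison is an exact string match, `www.blog.example.org` does not match `blog.example.org`. If your site is served from both hosts, you may want to normalize the host first, for example with `urlparse(href).hostname`, which is lowercased and excludes any port.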

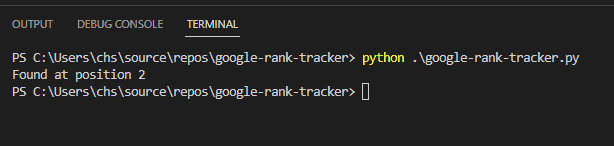

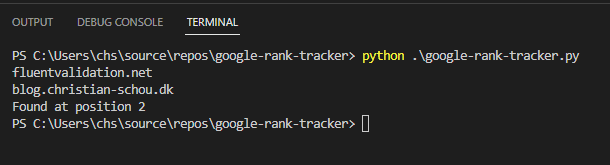

Let's run the script and see the result:

If you would like to see the domains we got until we reached our own domain, you can remove the # from the line print(domain). This will print out each domain in the result set when we iterate over them.

The request was a success, and the domain was found at position 2! Here is what the script looks like right now:

```python
# Import necessary libraries
import requests
from bs4 import BeautifulSoup
from urllib.parse import urlparse

# Declare request headers that look like a normal browser and not a crawler
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36',
    'referer': 'https://www.google.com'
}

# The domain we would like to match against in our search results
search_for_domain = "blog.christian-schou.dk"

# Define target URL to search for keyword
target_url = 'https://www.google.com/search?q=fluent+validation+.net6'

# Send request to Google for our target URL using the "normal" headers
response = requests.get(target_url, headers=headers)

# Response status code from Google
# print(response.status_code)

# Parse response into Beautiful Soup
soup = BeautifulSoup(response.text, 'html.parser')

# Extract all search results by looking up the class of the result container
results = soup.find_all("div", class_="MjjYud")

# Assume the domain was not found until we see it in the results
found = False
position = None

# Iterate over each result
for index, result in enumerate(results):
    # Look for the class yuRUbf to get the link of the result
    link_div = result.find("div", class_="yuRUbf")
    anchor = link_div.find("a") if link_div is not None else None
    # Skip blocks that do not contain an organic link
    if anchor is None:
        continue
    domain = urlparse(anchor.get("href", "")).netloc
    # print(domain)

    # If the domain matches the one we are looking for, store its position
    # (add 1, as the index starts at 0) and stop searching
    if domain == search_for_domain:
        found = True
        position = index + 1
        break

if found:
    # We found the domain we are looking for
    print("Found at position", position)
else:
    # We did not find the domain we are looking for
    print("Not found in top", len(results))
```

What if the domain isn't in the top 10 results? I usually only look at the top 100 results; beyond that, I consider the domain unranked. To read through the top 100 results, we have to change the target URL by adding the parameter &num=100 to the search request.

For the keyword scrape prices, which we will use next, the target URL would become https://www.google.com/search?q=scrape+prices&num=100.

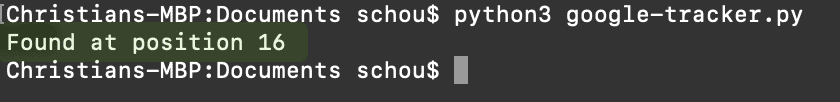

Now let's run the script one more time with another search query, since we already know that the first keyword ranks in the top 10 results. This time, let's search for scrape prices, the keyword for another article on my blog.

The target URL has been updated to: https://www.google.com/search?q=scrape+prices&num=100. Let's run the script again and see if we can find the domain we are targeting.

As you can see, we found our domain at position 16 in the search engine results pages (SERPs). This means that when you search for scrape prices on Google from Denmark, you will find my post at position 16. The position will, of course, differ depending on the country you are searching from.

With this simple Python script, you can now track the rank of any keyword on Google. If you would like to check the rank from different countries, you can route the request through a proxy located in each country (that is beyond the scope of this post).

Automating the SERP tracker

If you have a lot of keywords, you could automate the process with a spreadsheet and the schedule library for Python. This would let you iterate through a list of keywords and read the options for each keyword from the spreadsheet.
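As a sketch of the spreadsheet idea, assume you export the sheet to CSV with the hypothetical columns keyword, domain and depth (how many results to check). Python's built-in `csv` module can then turn each row into a target URL plus the domain to look for. The sample data is inlined with `io.StringIO` so the example runs on its own.

```python
import csv
import io
from urllib.parse import urlencode

# Hypothetical spreadsheet exported as CSV; the column names are assumptions
KEYWORDS_CSV = """keyword,domain,depth
fluent validation .net6,blog.christian-schou.dk,10
scrape prices,blog.christian-schou.dk,100
"""


def load_targets(csv_file):
    """Return (target_url, domain) pairs, one per spreadsheet row."""
    targets = []
    for row in csv.DictReader(csv_file):
        # Encode the keyword and the number of results into the query string
        query = urlencode({"q": row["keyword"], "num": row["depth"]})
        targets.append((f"https://www.google.com/search?{query}", row["domain"]))
    return targets


for target_url, domain in load_targets(io.StringIO(KEYWORDS_CSV)):
    print(target_url, domain)
```

With a real file, you would pass `open("keywords.csv", newline="")` (a hypothetical file name) instead of the `StringIO` object, and then run the tracker once for each pair.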


Implementation of schedule

With the schedule library, you can schedule the report to run every day, hour, minute, second, and so on. Tracking your keywords regularly is a good idea if you put a lot of energy or money into making great content.

Let's say you would like to run the report every day. All we have to do is move the logic from our tracker into a function and call that function with schedule.

Below is a simple example of how schedule works.

Method #1 - Run a job every x seconds.

- We import `schedule`.
- We define a new function named `greet()`.
- We schedule the `greet()` function to be called every 5 seconds.
- In an endless `while True` loop, we run any pending jobs on the default scheduler (to stop the script, press CTRL + C).

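```python
import schedule
import time

def greet():
    print("How are you?")

schedule.every(5).seconds.do(greet)

while True:
    schedule.run_pending()
    # Pause between checks so the loop doesn't keep the CPU busy
    time.sleep(1)
```

Method #2 - Use a decorator to schedule a job.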

This time, we remove the `schedule.every(5).seconds.do(greet)` call and replace it with a decorator. We pass the decorator an interval using the same syntax as above, but without the `.do()`.

```python
import schedule
import time
from schedule import every, repeat

@repeat(every(5).seconds)
def greet():
    print("How are you?")

while True:
    schedule.run_pending()
    time.sleep(1)
```

The output of both methods is:

```text
$ python schedule-demo.py
How are you?
How are you?
How are you?
... (until you stop it using CTRL + C)
```

Now that we know how schedule works, let's use it to automate the SERP tracker we wrote before. All we have to do is move our SERP keyword tracker logic into a function. Let's name it `rank_tracker()` (Python function names cannot contain hyphens). Below is the implementation for you to copy, which I explain afterwards.

```python
# Import necessary libraries
import time
from urllib.parse import urlparse

import requests
import schedule
from bs4 import BeautifulSoup
from schedule import every, repeat


# Run the tracker once every day
@repeat(every(1).days)
def rank_tracker():
    # Declare request headers that look like a normal browser and not a crawler
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36',
        'referer': 'https://www.google.com'
    }

    # The domain we would like to match against in our search results
    search_for_domain = "blog.christian-schou.dk"

    # Define target URL to search for keyword
    target_url = 'https://www.google.com/search?q=scrape+validation+.net6&num=50'

    # Send request to Google for our target URL using the "normal" headers
    response = requests.get(target_url, headers=headers)

    # Response status code from Google
    # print(response.status_code)

    # Parse response into Beautiful Soup
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract all search results by looking up the class of the result container
    results = soup.find_all("div", class_="MjjYud")

    # Assume the domain was not found until we see it in the results
    found = False
    position = None

    # Iterate over each result
    for index, result in enumerate(results):
        # Look for the class yuRUbf to get the link of the result
        link_div = result.find("div", class_="yuRUbf")
        anchor = link_div.find("a") if link_div is not None else None
        # Skip blocks that do not contain an organic link
        if anchor is None:
            continue
        domain = urlparse(anchor.get("href", "")).netloc
        # print(domain)

        # If the domain matches the one we are looking for, store its position
        # (add 1, as the index starts at 0) and stop searching
        if domain == search_for_domain:
            found = True
            position = index + 1
            break

    if found:
        # We found the domain we are looking for
        print("Found at position", position)
    else:
        # We did not find the domain we are looking for
        print("Not found in top", len(results))


# Keep the script running and execute the job whenever it is due
while True:
    schedule.run_pending()
    time.sleep(1)
```

We have now moved the tracker logic into the `rank_tracker()` function and added a decorator that tells schedule to run it every day. The job itself is executed by `schedule.run_pending()`, which we call in an endless loop. I have also added `time.sleep(1)` inside the loop, as the official schedule documentation does, so the script checks for pending jobs once a second instead of keeping the CPU busy. Remember to import the `time` module, or the script will fail at runtime with a `NameError`.

Summary

Python is a very powerful programming language that is easy to get started with. With pip, we can easily extend an application's functionality without reinventing the wheel.

In this tutorial, you learned how easy it is to scrape Google for domain rankings based on a keyword and a target URL. You also learned how to automate the task with the schedule library.

Have you extended the script with extra functionality? Let me know about your additions in the comments. If you have any questions, let me know and I will help you. Until next time - Happy coding! ✌️